Deepfakes created from a single image. The method raised concerns that high-quality fakes are coming for the masses. But don't get too anxious, yet.

Egor Zakharov, Aliaksandra Shysheya, Egor Burkov, Victor Lempitsky


Recently, the Mona Lisa smiled. A big, wide smile, followed by what appeared to be a laugh and the silent mouthing of words that might just be an answer to the mystery that had beguiled her viewers for centuries.

A great many people were unnerved.

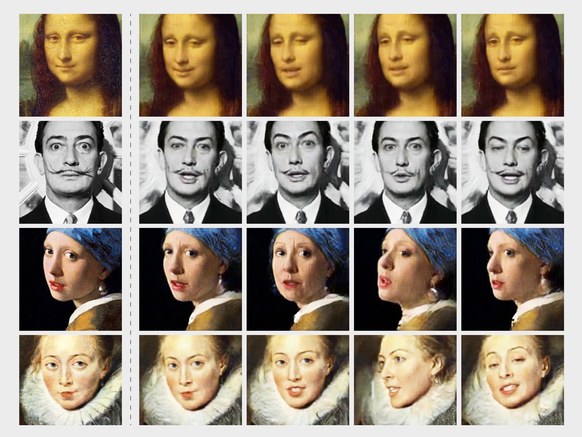

Mona’s “living portrait,” along with likenesses of Marilyn Monroe, Salvador Dali, and others, demonstrated the latest technology in deepfakes: seemingly realistic video or audio generated using machine learning. Developed by researchers at Samsung’s AI lab in Moscow, the portraits display a new method for creating credible videos from a single image. With just a few photographs of real faces, the results improve dramatically, producing what the authors describe as “photorealistic talking heads.” The researchers (creepily) call the result “puppeteering,” a reference to how invisible strings seem to manipulate the targeted face. And yes, it could, in theory, be used to animate your Facebook profile photo. But don’t freak out about strings maliciously pulling on your visage anytime soon.

“Nothing suggests to me that you’ll just turnkey use this for generating deepfakes at home. Not in the short term, medium term, or even the long term,” says Tim Hwang, director of the Harvard-MIT Ethics and Governance of AI Initiative. The reasons have to do with the high costs and technical know-how required to make quality fakes, barriers that aren’t going away anytime soon.

Using just one source image, the researchers were able to manipulate the facial expressions of people depicted in portraits and photos.

Egor Zakharov, Aliaksandra Shysheya, Egor Burkov, Victor Lempitsky

Deepfakes first entered the public eye in late 2017, when an anonymous Redditor under the name “deepfakes” began posting videos of celebrities like Scarlett Johansson stitched onto the bodies of pornographic actors. The first examples involved tools that could insert a face into existing footage, frame by frame (a glitchy process then and now), and quickly expanded to political figures and TV personalities. Celebrities are the easiest targets, with ample public imagery that can be used to train deepfake algorithms; it’s relatively easy to make a high-fidelity video of Donald Trump, for example, who appears on TV day and night and at all angles.

The underlying technology for deepfakes is a hot area for companies working on things like augmented reality. On Friday, Google released a breakthrough in controlling depth perception in video footage, addressing, in the process, an easy tell that plagues deepfakes. In their paper, published Monday as a preprint, the Samsung researchers point to quickly creating avatars for games or video conferences. Ostensibly, the company could use the underlying model to generate an avatar from just a few images, a photorealistic answer to Apple’s Memoji. The same lab also published a paper today on generating full-body avatars.

Concerns about malicious use of those advances have sparked a debate about whether deepfakes could be used to undermine democracy. The worry is that a cleverly crafted deepfake of a public figure, perhaps imitating a grainy cell phone video so that its imperfections are overlooked, and timed for the right moment, could shape a lot of opinions. That has spurred an arms race to automate ways of detecting them ahead of the 2020 elections. The Pentagon’s Darpa has spent tens of millions on a media forensics research program, and several startups are angling to become arbiters of truth as the campaign gets underway. In Congress, politicians have called for legislation banning their “malicious use.”

But Robert Chesney, a professor of law at the University of Texas, says political disruption doesn’t require cutting-edge technology; it can result from lower-quality material, intended to sow discord but not necessarily to fool anyone. Take, for example, the three-minute clip of House Speaker Nancy Pelosi circulating on Facebook, appearing to show her drunkenly slurring her words in public. It wasn’t even a deepfake; the culprits had simply slowed down the video.

By reducing the number of images required, Samsung’s method does add another wrinkle: “This means bigger problems for ordinary people,” says Chesney. “Some people might have felt a little insulated by the anonymity of not having much video or photographic evidence online.” Called “few-shot learning,” the approach does most of the heavy computational lifting ahead of time. Rather than being trained on, say, Trump-specific footage, the system is fed a far larger amount of video that includes many different people. The idea is that the system will learn the basic contours of human heads and facial expressions. From there, the neural network can apply what it knows to manipulate a given face based on just a few images, or, as in the case of the Mona Lisa, just one.
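To make the “learn from everyone first, then adapt to one person” idea concrete, here is a deliberately simplified sketch in Python. It is not Samsung’s method, which trains deep neural networks on video; it swaps in a much simpler statistical idea, shrinking an estimate toward a prior learned from many people. Each “face” is a hypothetical short list of numbers standing in for facial features, and the names `learn_prior`, `few_shot_estimate`, and the `strength` parameter are illustrative assumptions, not part of any real system.

```python
from statistics import fmean

# Hypothetical toy representation: a "face" is a short list of numbers
# standing in for facial features. Real systems learn far richer ones.
Face = list[float]


def learn_prior(training_faces: list[Face]) -> Face:
    """The up-front "heavy lifting": average many different people's faces
    into one generic face that captures what faces typically look like."""
    dims = len(training_faces[0])
    return [fmean(face[d] for face in training_faces) for d in range(dims)]


def few_shot_estimate(prior: Face, target_images: list[Face], strength: float = 2.0) -> Face:
    """Estimate a new person's face from only a few images.

    With one image the estimate leans heavily on the generic prior; each
    additional image shifts weight toward the target's own data.
    `strength` is a hypothetical knob for how much the prior is trusted.
    """
    k = len(target_images)
    if k == 0:
        return list(prior)  # no data on this person: fall back to the prior
    w = k / (k + strength)  # weight given to the target's own images
    dims = len(prior)
    sample_mean = [fmean(img[d] for img in target_images) for d in range(dims)]
    return [w * s + (1 - w) * p for s, p in zip(sample_mean, prior)]


if __name__ == "__main__":
    prior = learn_prior([[0.0, 0.0], [2.0, 2.0], [4.0, 4.0]])  # [2.0, 2.0]
    print(few_shot_estimate(prior, [[5.0, 5.0]]))        # about [3.0, 3.0]
    print(few_shot_estimate(prior, [[5.0, 5.0]] * 3))    # about [3.8, 3.8]
```

With a single image, the estimate moves only a third of the way from the generic face toward the target; with three images, it moves most of the way. Real few-shot systems learn far more sophisticated priors, but the rough intuition is similar: the less data there is on a person, the more the system leans on what it learned from everyone else.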

The approach is similar to methods that have revolutionized how neural networks learn other things, like language, using massive datasets that teach them generalizable principles. That has given rise to models like OpenAI’s GPT-2, which produces written language so fluent that its creators decided against releasing it, out of fear that it would be used to craft fake news.

There are big obstacles to wielding this new technique maliciously against you and me. The system relies on fewer images of the target face, but it requires training a big model from scratch, which is expensive and time-consuming, and will likely only become more so. Such models also take expertise to wield. It’s unclear why you would want to generate a video from scratch rather than turning to, say, established techniques in film editing or Photoshop. “Propagandists are pragmatists. There are many more low-cost ways of doing this,” says Hwang.

For now, if it were adapted for malicious use, this particular strain of chicanery would be easy to spot, says Siwei Lyu, a professor at the State University of New York at Albany who studies deepfake forensics under Darpa’s program. The demo, while impressive, misses finer details, he notes, like Marilyn Monroe’s famous mole, which vanishes as she throws back her head to laugh. The researchers also haven’t yet addressed other challenges, like how to properly sync audio to the deepfake and how to iron out glitchy backgrounds. For comparison, Lyu sends me a state-of-the-art example using a more traditional technique: a video fusing Obama’s face onto an impersonator singing Pharrell Williams’ “Happy.” The Albany researchers weren’t releasing the method, he said, because of its potential to be weaponized.

Hwang has little doubt that improved technology will eventually make it hard to distinguish fakes from reality. The costs will go down, or a better-trained model will be released somehow, enabling some savvy person to build a powerful online tool. When that time comes, he argues, the solution won’t necessarily be top-notch digital forensics but the ability to look at contextual clues: a robust way for the public to evaluate evidence outside the video that corroborates or refutes its authenticity. Fact-checking, basically.

But fact-checking like that has already proven a challenge for digital platforms, especially when it comes to taking action. As Chesney points out, it’s currently easy enough to detect altered video, like the Pelosi clip. The question is what to do next without heading down a slippery slope of trying to determine the creators’ intent: whether it was satire, perhaps, or made with malice. “If it seems clearly intended to defraud the listener into thinking something pejorative, it seems obvious to take it down,” he says. “But then once you go down that path, you fall into a line-drawing problem.” As of the weekend, Facebook appeared to have come to a similar conclusion: The Pelosi video was still being shared around the internet, with, the company said, additional context from independent fact-checkers.

This story originally appeared on Wired.com.