We have various ways of seeing what the brain is up to, from low-resolution electrodes that track waves of activity rippling across the brain to implanted electrodes that can follow the activity of individual cells. Combined with detailed knowledge of which brain regions are involved in specific processes, we’ve been able to do remarkable things, such as using functional MRI (fMRI) to determine what letter a person was looking at or using an implant to control a robotic arm.

But today, researchers announced a new bit of mind reading that’s impressive in its scope. By combining fMRI brain imaging with a system that’s somewhat like the predictive text on cell phones, they’ve worked out the gist of the sentences a person is hearing in near real time. While the system doesn’t get the exact words right and makes a fair number of mistakes, it’s flexible enough to reconstruct an imaginary monologue that plays out entirely within someone’s head.

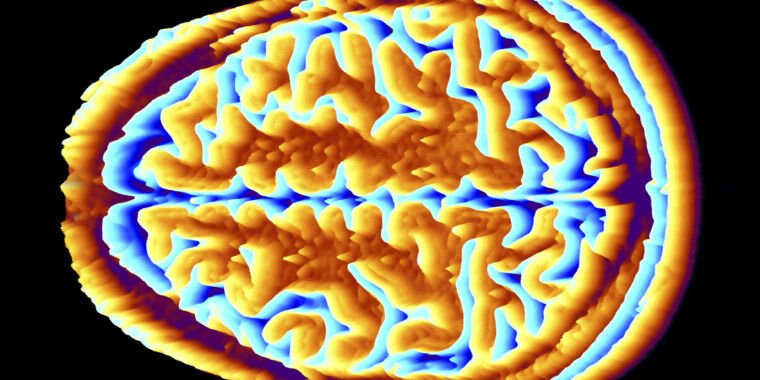

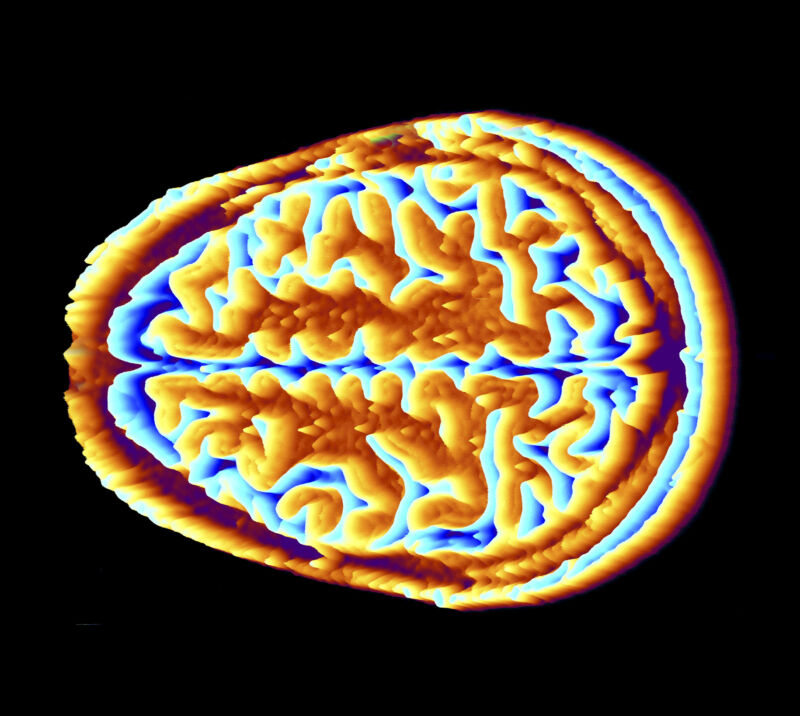

Making functional MRI functional

Functional MRI is a way of seeing which parts of the brain have been active. By tuning the imaging to pick up changes in blood flow and oxygenation, it’s possible to identify areas within the brain that are replenishing their energy after having processed some information. It has been extremely useful for understanding how the brain operates, but it also has some significant limitations.

To start, the person whose brain is being imaged has to be stuffed inside an MRI tube for it to work, so it’s not useful for real-world applications. In addition, its spatial resolution is limited, so activities that involve only a small number of cells can be difficult to resolve. Finally, the signal fMRI picks up lags behind the neural activity that causes it: it starts rising a couple of seconds after the neurons fire, builds to a peak, and doesn’t fade out until a full 10 seconds after they were active.

This delay makes tracking a continuous activity, such as reading a few paragraphs, very tricky, because the areas activated by one word may partly overlap, both physically and temporally, with those activated by the words that follow.
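To make the overlap problem concrete, the minimal Python sketch below adds up the delayed responses to a stream of words. It assumes a gamma-shaped response curve (a common textbook approximation, not something taken from this study) with made-up parameters chosen to mimic the rough timing described above, plus a hypothetical speech rate of one word every 0.4 seconds. The names `hrf`, `bold_signal`, and `words_contributing` are illustrative only.

```python
import math

def hrf(t: float, shape: float = 8.0, scale: float = 0.6) -> float:
    """Illustrative gamma-shaped blood-flow response to a brief burst of activity.

    Scaled so the peak (at t = shape * scale = 4.8 s) equals 1.0. The shape and
    scale values are assumptions picked to rise after ~2 s and fade by ~10 s.
    """
    if t <= 0:
        return 0.0
    peak = shape * scale
    return (t / peak) ** shape * math.exp(shape - t / scale)

# Hypothetical speech rate: one word every 0.4 s (about 150 words per minute).
WORD_ONSETS = [i * 0.4 for i in range(30)]

def bold_signal(t: float, onsets: list[float]) -> float:
    """Signal measured at time t: the delayed responses to all words add together."""
    return sum(hrf(t - onset) for onset in onsets)

def words_contributing(t: float, onsets: list[float], threshold: float = 0.1) -> list[float]:
    """Onsets of words whose response is still at least `threshold` of its peak at time t."""
    return [onset for onset in onsets if hrf(t - onset) >= threshold]

snapshot = words_contributing(10.0, WORD_ONSETS)
print(f"{len(snapshot)} words overlap in the signal at t = 10 s")
print(f"summed signal: {bold_signal(10.0, WORD_ONSETS):.2f}")
```

In this toy setup, more than a dozen words are still contributing to the signal at any single moment, so there is no clean way to read off one word per scan.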

The researchers behind the new work (a small team at the University of Texas at Austin) decided to skip identifying individual words and instead focus on developing a system that could identify a sequence of words. They also assembled an astonishing data set to train the recognition system: Three individuals each spent 16 hours sitting in MRI tubes while text was read to them.

What are you listening to?

The system works by constantly updating a list of candidate phrases that are consistent with the brain activity registered by the fMRI. The computerized portion of things is based on a generative neural network language model trained on the English language, which narrows down the word combinations it needs to consider. (This keeps the system from evaluating the possibility that users are hearing “aimless hyena mauve” or something equally nonsensical.)
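The study used a neural network language model; as a much simpler stand-in, this Python sketch builds a toy bigram model from a tiny, made-up corpus and uses it to discard candidate phrases containing word pairs it has never seen. The corpus and function names are hypothetical, and unlike a real neural model, which gives unlikely phrases a small but non-zero probability, this toy assigns them zero.

```python
import math
from collections import Counter, defaultdict

# Hypothetical training text standing in for "the English language".
CORPUS = (
    "i went to the store . i went to the park . "
    "she went home . the dog ran to the park ."
).split()

# Count how often each word follows each other word (a bigram model).
BIGRAMS = defaultdict(Counter)
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    BIGRAMS[prev][nxt] += 1

def next_word_log_prob(prev: str, word: str) -> float:
    """Log-probability that `word` follows `prev`; -inf if the pair was never seen."""
    followers = BIGRAMS[prev]
    if followers[word] == 0:
        return -math.inf
    return math.log(followers[word] / sum(followers.values()))

def phrase_log_prob(words: list[str]) -> float:
    """Score a whole phrase by adding up its word-pair log-probabilities."""
    return sum(next_word_log_prob(a, b) for a, b in zip(words, words[1:]))

candidates = [["went", "to", "the", "park"], ["aimless", "hyena", "mauve"]]
plausible = [c for c in candidates if phrase_log_prob(c) > -math.inf]
print(plausible)  # [['went', 'to', 'the', 'park']]
```

Only the ordinary phrase survives; “aimless hyena mauve” is dropped because none of its word pairs appear in the training text.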

That still leaves a huge range of valid English phrases that the system needs to consider. To handle this in near real time, the researchers turned to something called a beam search algorithm. As the neural response to a spoken word is processed, the system creates a list of high-scoring potential matches. As the next word’s response is added, it converts these to scored lists of two-word combinations that are both good matches for the neural signals and make sense given its training in the English language. As each new response comes in from the fMRI, it continues refining these lists until it has scores for entire phrases.
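Here is a minimal Python sketch of a beam search along these lines, under loudly stated assumptions: the language model is a toy bigram model built from a made-up corpus, each word’s expected brain response is collapsed to a single hypothetical number (`WORD_FEATURE`), and the hypothetical `match_score` simply penalizes the squared difference from the observed value. The real system compares predicted and measured fMRI responses in far more detail; this only shows how candidate lists are extended, scored, and pruned one response at a time.

```python
import math
from collections import Counter, defaultdict

# Hypothetical training text for a toy bigram language model ("." marks sentence ends).
CORPUS = (
    "i went to the store . she went to the park . "
    "the dog ran to the park . i saw the dog ."
).split()
NEXT = defaultdict(Counter)
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    NEXT[prev][nxt] += 1

# Hypothetical one-number stand-in for the fMRI response each word evokes.
WORD_FEATURE = {".": 0.0, "she": 1.0, "i": 2.0, "the": 3.0, "went": 4.0, "saw": 5.0,
                "to": 6.0, "ran": 7.0, "store": 8.0, "park": 9.0, "dog": 10.0}

def match_score(word: str, observed: float) -> float:
    """Closer to 0 when the word's expected response matches the observed one."""
    return -(WORD_FEATURE[word] - observed) ** 2

def beam_search(observations: list[float], beam_width: int = 3):
    beams = [(["."], 0.0)]  # start right after a sentence boundary
    for observed in observations:
        candidates = []
        for words, score in beams:
            followers = NEXT[words[-1]]
            total = sum(followers.values())
            for nxt, count in followers.items():
                # Combine "makes sense in English" with "matches the brain signal".
                lm = math.log(count / total)
                candidates.append((words + [nxt], score + lm + match_score(nxt, observed)))
        # Keep only the highest-scoring partial phrases.
        beams = sorted(candidates, key=lambda b: b[1], reverse=True)[:beam_width]
    return [(words[1:], score) for words, score in beams]

# Noisy hypothetical observations roughly matching "she went to the park".
for words, score in beam_search([1.1, 3.9, 6.2, 2.8, 8.7]):
    print(" ".join(words), round(score, 2))
```

With these made-up observations, the top-ranked candidate is “she went to the park”, while runners-up such as “she went to the store” stay on the list in case later evidence favors them. The `beam_width` parameter trades speed for thoroughness: a wider beam keeps more alternatives alive at each step.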

By managing these as lists of candidates rather than committing to a single answer, the system avoids throwing out a good match if it misidentifies a single word. And by limiting things to common English usage, it avoids getting bogged down tracking nonsense phrases.
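The benefit of keeping lists can be seen by running a tiny toy decoder with a beam width of 1 (a greedy search that keeps only its single best guess) and then with a width of 2. In this hypothetical setup, a noisy first observation makes “she” look slightly better than the correct “the”; the probabilities, response values, observations, and the `decode` function are all invented for illustration.

```python
import math

# Hypothetical next-word probabilities from a toy language model.
NEXT_WORD_PROBS = {
    "<s>": {"she": 0.5, "the": 0.5},
    "she": {"went": 1.0},
    "the": {"dog": 1.0},
    "went": {"home": 1.0},
    "dog": {"ran": 1.0},
}

# Hypothetical one-number stand-in for the brain response each word evokes.
WORD_FEATURE = {"she": 1.0, "the": 2.0, "dog": 3.0, "ran": 4.0, "went": 5.0, "home": 8.0}

def decode(observations: list[float], beam_width: int) -> list[str]:
    """Return the best phrase found while keeping `beam_width` candidates per step."""
    beams = [(["<s>"], 0.0)]
    for observed in observations:
        expanded = []
        for words, score in beams:
            for nxt, prob in NEXT_WORD_PROBS.get(words[-1], {}).items():
                fit = -(WORD_FEATURE[nxt] - observed) ** 2
                expanded.append((words + [nxt], score + math.log(prob) + fit))
        beams = sorted(expanded, key=lambda b: b[1], reverse=True)[:beam_width]
    best_words, _ = beams[0]
    return best_words[1:]  # drop the start marker

observations = [1.4, 3.1, 4.0]  # noisy version of "the dog ran"
print(decode(observations, beam_width=1))  # ['she', 'went', 'home']
print(decode(observations, beam_width=2))  # ['the', 'dog', 'ran']
```

The greedy decoder commits to “she” and can never recover, while the two-item list keeps “the” alive long enough for the next two responses to confirm it.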